The hypothetico-deductive diagnostic method involves collecting clinical data that could serve as determinants of diagnosis, prognosis and response to treatment. The clinical data collected through subjective and objective assessments could vary between clinicians. So, it is important to collect reproducible and reliable information from the patients to form a hypothesis and make a diagnosis.

During a routine examination, a large volume of clinical data is generated. To make sure the information collected from patients is reproducible and reliable, we need to conduct experiments that measure the agreement between examiners (1-3).

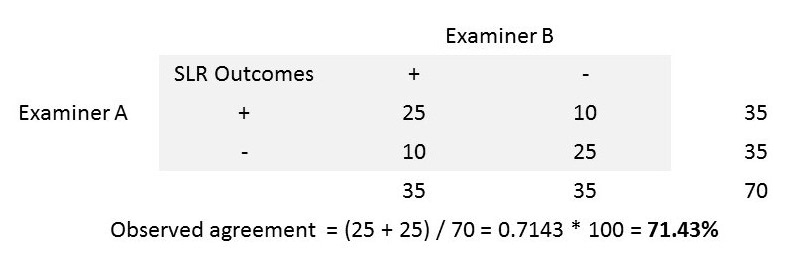

Step 1: Let us examine the outcome of a straight leg raise (SLR) test from two examiners to calculate the actual agreement between them.

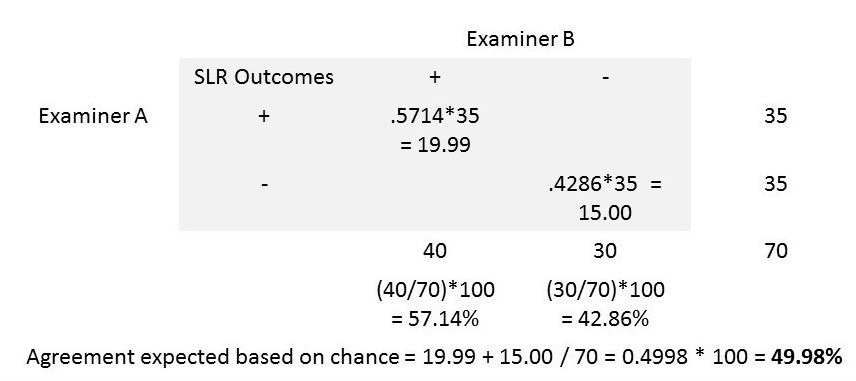

Step 2: Now, let us find out whether the agreement between these two examiners could have occurred by random chance. We can estimate such chance agreement by assuming that one of the clinicians made his/her decisions by tossing a coin. Let us assume that examiner ‘B’ tossed a coin and got 40 heads (SLR +ve) and 30 tails (SLR -ve).

By random chance, we can expect 57.14% (i.e., 40/70 * 100) of the 35 SLR results reported as ‘+’ by examiner ‘A’ to be ‘+’ by examiner ‘B’ as well. We can also expect 42.86% (i.e., 30/70 * 100) of the 35 results reported as ‘-’ by examiner ‘A’ to be ‘-’ by examiner ‘B’ as well.

The agreement between the two examiners expected based on random chance can now be calculated as (19.99 + 15.00) / 70 = 0.4998 or 49.98%. The value falls just short of 35/70 = 50% only because the percentages were rounded before multiplying.

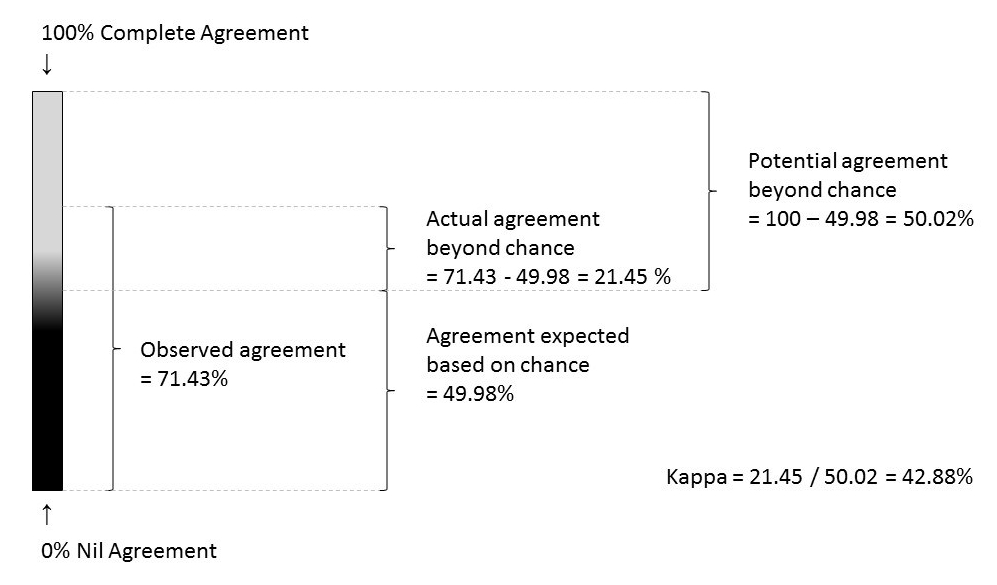
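The chance-agreement arithmetic in step 2 can be sketched in a few lines of Python. This is a minimal illustration, not the method used to produce the figures: the function name `chance_agreement` and its parameter names are assumptions, and the inputs are the marginal totals quoted in the text (35 ‘+’ and 35 ‘-’ for examiner ‘A’, 40 ‘+’ and 30 ‘-’ for examiner ‘B’).

```python
def chance_agreement(a_pos, a_neg, b_pos, b_neg):
    """Proportion of agreement expected by chance from the marginal totals.

    Hypothetical helper: the names are illustrative, not from the article.
    """
    n = a_pos + a_neg
    if n != b_pos + b_neg:
        raise ValueError("both examiners must rate the same number of patients")
    # Expected number of patients both examiners would call '+' by chance
    expected_both_pos = a_pos * b_pos / n
    # Expected number of patients both examiners would call '-' by chance
    expected_both_neg = a_neg * b_neg / n
    return (expected_both_pos + expected_both_neg) / n


# Marginal totals quoted in step 2
p_e = chance_agreement(a_pos=35, a_neg=35, b_pos=40, b_neg=30)
print(f"Chance-expected agreement: {p_e:.4f}")
```

Because the sketch does not round the intermediate percentages, it returns 0.5 rather than the 0.4998 obtained by hand.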

Step 3: Comparing the observed agreement (71.43% in step 1) with the agreement expected based on random chance (49.98% in step 2) gives us a clinically useful index called the ‘kappa degree of agreement’ (4).

kappa = actual agreement beyond chance / potential agreement beyond chance

Using the observed agreement from step 1, the actual agreement beyond chance = 71.43 – 49.98 = 21.45%

Using the chance agreement from step 2, the potential agreement beyond chance = 100 – 49.98 = 50.02%

kappa = 21.45 / 50.02 = 0.4288

Hence, the two examiners achieved about 43% of the potential agreement beyond chance (kappa = 0.43).
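The step 3 calculation can be expressed as a short Python function. This is only a sketch of the ratio defined above; the function name `kappa` and the argument names `observed` and `expected` are assumptions introduced for illustration, and both arguments are proportions rather than percentages.

```python
def kappa(observed, expected):
    """Kappa = actual agreement beyond chance / potential agreement beyond chance.

    Hypothetical helper; both arguments are proportions between 0 and 1.
    """
    if expected >= 1:
        raise ValueError("kappa is undefined when chance agreement is 100%")
    actual_beyond_chance = observed - expected
    potential_beyond_chance = 1 - expected
    return actual_beyond_chance / potential_beyond_chance


# Values from steps 1 and 2 of the worked example
k = kappa(observed=0.7143, expected=0.4998)
print(f"kappa = {k:.4f}")
```

With the rounded inputs from the worked example, this reproduces the hand calculation of about 0.43.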

The kappa values can be categorised for meaningful interpretation as follows:

- < 0: Less than chance agreement

- 0.01–0.20: Slight agreement

- 0.21–0.40: Fair agreement

- 0.41–0.60: Moderate agreement

- 0.61–0.80: Substantial agreement

- 0.81–0.99: Almost perfect agreement
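The categories above can be turned into a small lookup, sketched here in Python. The function name `interpret_kappa` is hypothetical. One assumption is worth stating loudly: the listed ranges leave small gaps (exactly 0, values such as 0.205, and exactly 1), and this sketch folds them into the adjacent band by checking upper bounds only.

```python
def interpret_kappa(k):
    """Map a kappa value to the descriptive category listed above.

    Assumption: gaps in the listed ranges (exactly 0, values between
    bands, exactly 1) are assigned to the band whose upper bound
    they fall under.
    """
    if not -1 <= k <= 1:
        raise ValueError("kappa must lie between -1 and 1")
    if k < 0:
        return "Less than chance agreement"
    bands = [
        (0.20, "Slight agreement"),
        (0.40, "Fair agreement"),
        (0.60, "Moderate agreement"),
        (0.80, "Substantial agreement"),
        (1.00, "Almost perfect agreement"),
    ]
    for upper_bound, label in bands:
        if k <= upper_bound:
            return label


# The kappa from the worked example
print(interpret_kappa(0.43))
```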

Issue of Clinical Disagreement: Additionally, the extent of disagreement between the examiners is also important as it is prevalent in every step of our clinical care. For example, when a patient visits a care provider for the first time to consult about his/her knee pain, the clinician listens to the patient and documents the symptoms. The disagreement between the clinicians starts at this very first step. If the patient consults two clinicians separately on the same day, there will be differences in the clinical data collected between these two clinicians.

From this point onwards, the hypotheses generation, differential diagnoses, treatment options, referrals, follow-up care and decision on discharge from further treatment will differ (to a certain degree) between these two clinicians. As long as the care provider and the recipient are biologic beings, there will always be a certain degree of subjectiveness in our current model of health care.

The main reasons for clinical disagreement include the following:

- Biologic variation in the presenting symptoms/signs

- Biologic variation of the clinician’s sensory-motor ability to observe the presenting symptoms/signs

- The ambiguity of the presenting symptom/sign being observed

- Clinician’s biases (e.g., influence of gestalt patterns, cultural differences, etc.)

- Faulty application of clinical data collection methods (e.g., going off on the wrong track during history taking)

- Clinician’s incompetence or carelessness

We can apply certain strategies to prevent or reduce the possibility of clinical disagreement. They are:

- define the observed symptoms/signs clearly

- define the technique of observation clearly

- master the technique of observation

- avoid the biases consciously

- seek a standardised environment while performing clinical observations/tests

- seek blinded opinions from your colleagues and corroborate your findings

- use refined, highly calibrated tools for measurement (e.g., VAS in mm)

- document your findings using a format that is standardised between clinicians

Weighted kappa: The standard kappa statistic measures the degree of agreement between two clinicians but does not account for the degree of disagreement. A useful property of the kappa statistic, however, is that disagreements can be weighted according to their magnitude, i.e., the larger the disagreement between clinicians, the higher the weight assigned.

Warrens (5) reports that there are eight different weighting schemes available for 3 × 3 categorical tables. An appropriate weighting scheme can be chosen by considering the type of categorical scale used to measure the degree of agreement between the clinicians. The weighted kappas proposed by Cicchetti (6) and Warrens (7) can be used for dichotomous ordinal scale data. The linearly and quadratically weighted kappas can be used for continuous ordinal data (5).

The most commonly used and criticised one is the weighted Cohen’s kappa for nominal categories (5). The quadratically weighted kappa is also popular as it produces higher values (5). A judicious use of the weighting schemes is recommended to avoid wrong decisions in clinical practice (5).
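To make the weighting idea concrete, here is a minimal Python sketch of the linearly and quadratically weighted kappas. It is an illustration under stated assumptions, not the procedure from the cited papers: the function name `weighted_kappa`, the `scheme` argument and the 3 × 3 table of counts are all hypothetical, and the weights are the usual disagreement weights (0 on the diagonal, growing with the distance between categories).

```python
def weighted_kappa(table, scheme="linear"):
    """Weighted kappa for a square table of counts (rows: examiner A,
    columns: examiner B, categories in ordinal order).

    Hypothetical helper using disagreement weights |i - j| / (k - 1),
    raised to the power 1 (linear) or 2 (quadratic).
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    power = {"linear": 1, "quadratic": 2}[scheme]

    observed = 0.0  # weighted disagreement actually observed
    expected = 0.0  # weighted disagreement expected by chance
    for i in range(k):
        for j in range(k):
            weight = (abs(i - j) / (k - 1)) ** power
            observed += weight * table[i][j]
            expected += weight * row_totals[i] * col_totals[j] / n
    if expected == 0:
        raise ValueError("weighted kappa is undefined for this table")
    return 1 - observed / expected


# Made-up ratings of 70 patients on a three-point ordinal scale
ratings = [
    [20, 5, 1],
    [4, 15, 6],
    [2, 3, 14],
]
linear = weighted_kappa(ratings, "linear")
quadratic = weighted_kappa(ratings, "quadratic")
print(f"linear: {linear:.4f}, quadratic: {quadratic:.4f}")
```

For this made-up table the quadratic scheme gives the larger value, because the many one-category disagreements are penalised less heavily than under linear weights.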

References:

1. Sackett, D.L., Haynes, R.B., Guyatt, G.H. and Tugwell, P., 1991. Clinical epidemiology: a basic science for clinical medicine (2nd ed.). Little, Brown, Boston, MA.

2. Fletcher, R.H., Fletcher, S.W. and Fletcher, G.S., 2012. Clinical epidemiology: the essentials. Lippincott Williams & Wilkins.

3. McGee, S., 2012. Evidence-based physical diagnosis. Elsevier Health Sciences.

4. Viera, A.J. and Garrett, J.M., 2005. Understanding interobserver agreement: the kappa statistic. Fam Med, 37(5), pp.360-363.

5. Warrens, M.J., 2013. Weighted kappas for 3 × 3 tables. Journal of Probability and Statistics, 2013.

6. Cicchetti, D.V., 1976. Assessing inter-rater reliability for rating scales: resolving some basic issues. The British Journal of Psychiatry, 129(5), pp.452-456.

7. Warrens, M.J., 2013. Cohen’s weighted kappa with additive weights. Advances in Data Analysis and Classification, 7(1), pp.41-55.